Linear algebra focuses on finite-dimensional vector spaces.

Standing assumptions for this chapter:

- $\mathbf{F}$ denotes either $\mathbf{R}$ or $\mathbf{C}$, in line with the notation of LADR.

- $V$ denotes a vector space over $\mathbf{F}$.

List of vectors: lists of vectors are written without surrounding parentheses. For example, a list of vectors $v_1, \dots, v_m$ in $V$ is written as $v_1, \dots, v_m$, as opposed to $(v_1, \dots, v_m)$.

Linear Combinations

Definition

The sum of scalar multiples of the vectors in a list is called a linear combination of the list. Here is an example: let $v_1, \dots, v_m \in V$. Notice these are vectors. Then a linear combination would be $a_1v_1 + \cdots + a_mv_m$ for $a_1, \dots, a_m \in \mathbf{F}$.

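Let's prove that $(17,-4,2)$ is a linear combination of $(2,1,-3), (1,-2,4)$, which is a list of two vectors in $\mathbf{R}^3$. Notice: we need $(17,-4,2) = a_1(2,1,-3) + a_2(1,-2,4)$ for some $a_1, a_2 \in \mathbf{R}$. This means we have the following set of equations:

$$2a_1 + a_2 = 17, \qquad a_1 - 2a_2 = -4, \qquad -3a_1 + 4a_2 = 2.$$

This is solved by $a_1 = 6$ and $a_2 = 5$.

Thus $(17,-4,2) = 6(2,1,-3) + 5(1,-2,4)$ is a linear combination of $(2,1,-3), (1,-2,4)$.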

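Similarly, determine whether $(17,-4,5)$ is a linear combination of the list $(2,1,-3), (1,-2,4)$ of vectors in $\mathbf{R}^3$. The equations are now $2a_1 + a_2 = 17$, $a_1 - 2a_2 = -4$, $-3a_1 + 4a_2 = 5$. The first two force $a_1 = 6$ and $a_2 = 5$, but then $-3a_1 + 4a_2 = 2 \neq 5$, so $(17,-4,5)$ is not a linear combination of this list.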
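Systems like these can also be solved mechanically. Below is a minimal Python sketch, assuming vectors are given as tuples of integers or rationals. `combination_coefficients` is a hypothetical helper name, not something from LADR. It runs Gauss-Jordan elimination with exact `Fraction` arithmetic on the system whose columns are the given vectors. It returns one valid choice of coefficients, or `None` when the target is not a linear combination of the list. For example, it can be applied to the list $(2,1,-3), (1,-2,4)$ in $\mathbf{R}^3$:

```python
from fractions import Fraction

def combination_coefficients(vectors, target):
    """Return scalars a_1..a_m with a_1*v_1 + ... + a_m*v_m == target, or None.

    Solves the linear system whose columns are the given vectors by
    Gauss-Jordan elimination over the rationals (exact arithmetic).
    Free variables, if any, are set to 0.
    """
    m, n = len(vectors), len(target)
    # Augmented matrix: row i is [v_1[i], ..., v_m[i] | target[i]].
    rows = [[Fraction(v[i]) for v in vectors] + [Fraction(target[i])] for i in range(n)]
    pivot_cols = []
    r = 0
    for col in range(m):
        pivot = next((i for i in range(r, n) if rows[i][col] != 0), None)
        if pivot is None:
            continue  # no pivot in this column: a free variable
        rows[r], rows[pivot] = rows[pivot], rows[r]
        p = rows[r][col]
        rows[r] = [x / p for x in rows[r]]  # scale so the pivot entry is 1
        for i in range(n):
            if i != r and rows[i][col] != 0:
                f = rows[i][col]
                rows[i] = [a - f * b for a, b in zip(rows[i], rows[r])]
        pivot_cols.append(col)
        r += 1
    # A row reading "0 = c" with c != 0 means the system has no solution.
    if any(all(x == 0 for x in row[:m]) and row[m] != 0 for row in rows):
        return None
    coeffs = [Fraction(0)] * m
    for i, col in enumerate(pivot_cols):
        coeffs[col] = rows[i][m]
    return coeffs

vs = [(2, 1, -3), (1, -2, 4)]
print(combination_coefficients(vs, (17, -4, 2)))  # [Fraction(6, 1), Fraction(5, 1)]
print(combination_coefficients(vs, (17, -4, 5)))  # None
```

The function always returns *one* solution. When the vectors are linearly independent, that solution is the only one.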

Span

Definition

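The set of all linear combinations of a list of vectors $v_1, \dots, v_m$ in $V$ is called the span of $v_1, \dots, v_m$, denoted $\operatorname{span}(v_1, \dots, v_m)$. The span of the empty list $()$ is defined to be $\{0\}$.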

Another way to think about it: the span is the set of all vectors that can be generated from the list.

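From before, we can see that $(17,-4,2) \in \operatorname{span}\big((2,1,-3), (1,-2,4)\big)$ and $(17,-4,5) \notin \operatorname{span}\big((2,1,-3), (1,-2,4)\big)$.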

Condition

The span of a list of vectors in $V$ is the smallest subspace of $V$ containing all vectors in that list.

Proof:

- Let's first prove that the span of a list of vectors is a subspace. Let $v_1, \dots, v_m \in V$:

- $0 \in \operatorname{span}(v_1, \dots, v_m)$, as $0 = 0v_1 + \cdots + 0v_m$.

- Additive closure:

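- Assume that we take two elements of $\operatorname{span}(v_1, \dots, v_m)$: $a_1v_1 + \cdots + a_mv_m$ and $c_1v_1 + \cdots + c_mv_m$, with $a_k, c_k \in \mathbf{F}$ for each $k$.

- The sum of these elements is as follows: $(a_1v_1 + \cdots + a_mv_m) + (c_1v_1 + \cdots + c_mv_m) = (a_1 + c_1)v_1 + \cdots + (a_m + c_m)v_m$.

- As each $a_k + c_k \in \mathbf{F}$, the sum is also a linear combination of $v_1, \dots, v_m$ and therefore is within the span.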

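- Closure under scalar multiplication:

- Assume $a_k \in \mathbf{F}$ for each $k$, and $\lambda \in \mathbf{F}$.

- Then $\lambda(a_1v_1 + \cdots + a_mv_m) = (\lambda a_1)v_1 + \cdots + (\lambda a_m)v_m$.

- As each $\lambda a_k \in \mathbf{F}$, the scalar multiple is also within the span.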

- It is the smallest subspace containing $v_1, \dots, v_m$:

- Assume that $U$ is a subspace of $V$ containing $v_1, \dots, v_m$.

- First notice that each $v_k \in \operatorname{span}(v_1, \dots, v_m)$. To see this, set $a_k$ to $1$ and the remaining scalars to $0$. This leaves only $v_k = 0v_1 + \cdots + 1v_k + \cdots + 0v_m$, which is a linear combination of $v_1, \dots, v_m$ and therefore part of the span.

- Next, closure under scalar multiplication and addition forces every element of the span to lie in $U$. Each $a_kv_k \in U$ by closure under scalar multiplication, and then the sum $a_1v_1 + \cdots + a_mv_m \in U$ by closure under addition. Hence $\operatorname{span}(v_1, \dots, v_m) \subseteq U$.

- We have already proved that the span is a subspace. It contains $v_1, \dots, v_m$ and is contained in every subspace that does, so it is the smallest such subspace.

Notation

If $\operatorname{span}(v_1, \dots, v_m) = V$, we say that $v_1, \dots, v_m$ spans $V$.

Example

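Let's show that $(1,0,\dots,0), (0,1,0,\dots,0), \dots, (0,\dots,0,1)$ spans $\mathbf{F}^n$. Proof: we need to show $\operatorname{span}\big((1,0,\dots,0), \dots, (0,\dots,0,1)\big) = \mathbf{F}^n$, i.e. inclusion in both directions:

- Let's take $(x_1, \dots, x_n) \in \mathbf{F}^n$. We can write this as: $(x_1, \dots, x_n) = x_1(1,0,\dots,0) + x_2(0,1,0,\dots,0) + \cdots + x_n(0,\dots,0,1)$.

- As $(x_1, \dots, x_n)$ can be written as a linear combination of $(1,0,\dots,0), \dots, (0,\dots,0,1)$, we know that it is within their span: $\mathbf{F}^n \subseteq \operatorname{span}\big((1,0,\dots,0), \dots, (0,\dots,0,1)\big)$.

- Take some element $v$ of the span and let $v = a_1(1,0,\dots,0) + \cdots + a_n(0,\dots,0,1)$ for $a_1, \dots, a_n \in \mathbf{F}$.

- Then notice $v = (a_1, \dots, a_n) \in \mathbf{F}^n$.

- Thus any element in the span is an element of $\mathbf{F}^n$, and the two sets are equal.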

Finite dimensional vector space:

Definition

A vector space is called finite dimensional if some list of vectors in it spans the space.

From the example above, $\mathbf{F}^n$ is a finite-dimensional vector space.

Polynomials:

Definition:

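You already know what they are, but here is a more formal definition:

- A function $p\colon \mathbf{F} \to \mathbf{F}$ is called a polynomial with coefficients in $\mathbf{F}$ if there exist $a_0, \dots, a_m \in \mathbf{F}$ such that $p(z) = a_0 + a_1z + a_2z^2 + \cdots + a_mz^m$ for all $z \in \mathbf{F}$.

- The set of all polynomials with coefficients in $\mathbf{F}$ is denoted $\mathcal{P}(\mathbf{F})$.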

Prove that $\mathcal{P}(\mathbf{F})$ is a vector space over $\mathbf{F}$. Proof:

- Additive properties

- Commutative:

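- Let $p, q \in \mathcal{P}(\mathbf{F})$.

- Then let $p(z) = a_0 + a_1z + \cdots + a_mz^m$ and $q(z) = b_0 + b_1z + \cdots + b_mz^m$. Pad the shorter one with zero coefficients so that both use the same $m$.

- Notice: $(p + q)(z) = (a_0 + b_0) + (a_1 + b_1)z + \cdots + (a_m + b_m)z^m = (b_0 + a_0) + (b_1 + a_1)z + \cdots + (b_m + a_m)z^m = (q + p)(z)$. In particular, $p + q$ is again a polynomial, so $\mathcal{P}(\mathbf{F})$ is closed under addition.

- Thus additive commutativity is met.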

- Associativity: Proved through a similar process

- Identity:

- Notice that the zero function is in $\mathcal{P}(\mathbf{F})$: set all the coefficients to $0$. It satisfies $p + 0 = p$ for every $p \in \mathcal{P}(\mathbf{F})$.

- Inverses:

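- Let $p(z) = a_0 + a_1z + \cdots + a_mz^m$, and define $-p$ by $(-p)(z) = -a_0 - a_1z - \cdots - a_mz^m$, which is again in $\mathcal{P}(\mathbf{F})$.

- Then we have: $(p + (-p))(z) = (a_0 - a_0) + (a_1 - a_1)z + \cdots + (a_m - a_m)z^m = 0$. By commutativity, $(-p) + p = 0$ as well.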

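- Scalar multiplication properties:

- Associativity:

- Let $\lambda, \mu \in \mathbf{F}$ and $p(z) = a_0 + a_1z + \cdots + a_mz^m$.

- Then $((\lambda\mu)p)(z) = (\lambda\mu)a_0 + (\lambda\mu)a_1z + \cdots + (\lambda\mu)a_mz^m = \lambda\big(\mu a_0 + \mu a_1z + \cdots + \mu a_mz^m\big) = (\lambda(\mu p))(z)$. Each $\lambda p$ is again a polynomial, with coefficients $\lambda a_k$, so $\mathcal{P}(\mathbf{F})$ is closed under scalar multiplication.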

- Identity:

- $1p = p$: multiplying by $1$ just leaves the coefficients as themselves.

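- Distributive laws:

- $\lambda(p + q) = \lambda p + \lambda q$:

- Trying a summation approach, let $p(z) = \sum_{k=0}^{m} a_kz^k$ and $q(z) = \sum_{k=0}^{m} b_kz^k$.

- Then $(\lambda(p + q))(z) = \lambda\sum_{k=0}^{m}(a_k + b_k)z^k = \sum_{k=0}^{m}\lambda a_kz^k + \sum_{k=0}^{m}\lambda b_kz^k = (\lambda p)(z) + (\lambda q)(z)$.

- $(\lambda + \mu)p = \lambda p + \mu p$:

- Similar to above: $((\lambda + \mu)p)(z) = \sum_{k=0}^{m}(\lambda + \mu)a_kz^k = \sum_{k=0}^{m}\lambda a_kz^k + \sum_{k=0}^{m}\mu a_kz^k = (\lambda p)(z) + (\mu p)(z)$.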
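The coefficient-wise operations in the proof above can be made concrete in a small Python sketch. It assumes a polynomial $a_0 + a_1z + \cdots + a_mz^m$ is stored as its coefficient list `[a_0, ..., a_m]`, a representation chosen for illustration. The helpers `add`, `scale`, and `evaluate` are hypothetical names. Checking the axioms at a handful of sample points is only a sanity check, not a proof.

```python
from fractions import Fraction
from itertools import zip_longest

# A polynomial a_0 + a_1 z + ... + a_m z^m is stored as [a_0, a_1, ..., a_m].

def add(p, q):
    """Coefficient-wise sum; the shorter list is padded with zero coefficients."""
    return [a + b for a, b in zip_longest(p, q, fillvalue=0)]

def scale(lam, p):
    """Scalar multiple lam * p, computed coefficient by coefficient."""
    return [lam * a for a in p]

def evaluate(p, z):
    """Evaluate p at z using Horner's rule."""
    result = 0
    for a in reversed(p):
        result = result * z + a
    return result

p = [1, 0, 3]      # 1 + 3z^2
q = [2, -1]        # 2 - z
lam, mu = Fraction(1, 2), 5

# Vector space properties, checked pointwise at a few sample inputs.
for z in range(-3, 4):
    assert evaluate(add(p, q), z) == evaluate(p, z) + evaluate(q, z)  # (p+q)(z) = p(z)+q(z)
    assert evaluate(add(p, q), z) == evaluate(add(q, p), z)           # commutativity
    assert evaluate(add(p, scale(-1, p)), z) == 0                     # additive inverse
    assert evaluate(scale(lam * mu, p), z) == evaluate(scale(lam, scale(mu, p)), z)
    assert evaluate(scale(lam, add(p, q)), z) == evaluate(add(scale(lam, p), scale(lam, q)), z)
    assert evaluate(scale(lam + mu, p), z) == evaluate(add(scale(lam, p), scale(mu, p)), z)
print("all checks passed")
```

Padding with zeros in `add` mirrors the step in the proof where both polynomials are written with the same $m$.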

Important

If a polynomial is represented by two sets of coefficients, subtracting one representation from the other produces a polynomial that is identically zero as a function on $\mathbf{F}$. That polynomial must therefore have all zero coefficients. We will prove this in Chapter 4.

This means that the coefficients of a polynomial are uniquely determined by the polynomial.

Degree definition

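$\deg p$ is the highest power of $z$ with a nonzero coefficient. More formally:

A polynomial $p \in \mathcal{P}(\mathbf{F})$ has degree $n$, written $\deg p = n$, if there exist scalars $a_0, a_1, \dots, a_n \in \mathbf{F}$ with $a_n \neq 0$ such that $p(z) = a_0 + a_1z + \cdots + a_nz^n$ for every $z \in \mathbf{F}$.

The polynomial that is identically $0$ has degree $-\infty$.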
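With a coefficient-list representation (an assumption for illustration, which relies on the uniqueness of coefficients noted above), the degree is the largest index with a nonzero coefficient. In this sketch Python's `-math.inf` stands in for $-\infty$, and `degree` is a hypothetical helper name:

```python
import math

def degree(coeffs):
    """Degree of a_0 + a_1 z + ... + a_m z^m given as [a_0, ..., a_m].

    Returns the largest index k with a_k != 0, or -math.inf for the
    zero polynomial (all coefficients 0, or an empty list).
    """
    for k in range(len(coeffs) - 1, -1, -1):
        if coeffs[k] != 0:
            return k
    return -math.inf

print(degree([1, 0, 3]))     # 2
print(degree([4, 2, 0, 0]))  # 1: trailing zero coefficients do not count
print(degree([0, 0]))        # -inf
```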

Degree polynomial set notation

$\mathcal{P}_m(\mathbf{F})$ is the set of all polynomials with coefficients in $\mathbf{F}$ and degree at most $m$.

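In the definition above, the "at most" is extremely important. It ensures, for example, that the zero polynomial (degree $-\infty$) lies in $\mathcal{P}_m(\mathbf{F})$. It also ensures that the sum of two polynomials of degree $m$ stays in $\mathcal{P}_m(\mathbf{F})$ even when its degree drops below $m$, e.g. $z^m + (1 - z^m) = 1$.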

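It is easy to see that $\mathcal{P}_m(\mathbf{F}) = \operatorname{span}(1, z, \dots, z^m)$, where $z^k$ denotes the function $z \mapsto z^k$. These functions are elements of $\mathcal{P}_m(\mathbf{F})$: you can get each $z^k$ by setting that specific coefficient to 1 and the other ones to 0. And by definition, the linear combinations of these functions make up $\mathcal{P}_m(\mathbf{F})$.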

Thus $\mathcal{P}_m(\mathbf{F})$ is a finite-dimensional vector space.

A vector space is called infinite-dimensional if it is not finite-dimensional, i.e. if there is no list of vectors in the vector space that spans it.

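$\mathcal{P}(\mathbf{F})$ is infinite-dimensional.

Proof: Take any list of elements of $\mathcal{P}(\mathbf{F})$. Let $m$ be the largest degree of an element of this list (take $m = 0$ if every element is the zero polynomial). Every linear combination of the list then has degree at most $m$, so $z^{m+1}$ is not in the span of the list. Thus the list cannot span all of $\mathcal{P}(\mathbf{F})$.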
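The argument can be checked on a concrete list. This Python sketch assumes polynomials are coefficient lists, padded with zeros to a common length. It tests span membership by row reduction with exact arithmetic: a target lies in the span of a list exactly when appending it does not increase the number of pivots. `rank`, `pad`, and `poly_in_span` are hypothetical helper names, and the example list is made up.

```python
from fractions import Fraction

def rank(vectors):
    """Number of pivots found by row-reducing the vectors (as rows), exactly."""
    rows = [[Fraction(x) for x in v] for v in vectors]
    r = 0
    ncols = len(rows[0]) if rows else 0
    for col in range(ncols):
        pivot = next((i for i in range(r, len(rows)) if rows[i][col] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        for i in range(len(rows)):
            if i != r and rows[i][col] != 0:
                factor = rows[i][col] / rows[r][col]
                rows[i] = [a - factor * b for a, b in zip(rows[i], rows[r])]
        r += 1
    return r

def pad(p, length):
    """Append zero coefficients so that p has the given length."""
    return list(p) + [0] * (length - len(p))

def poly_in_span(polys, target):
    """True iff target is a linear combination of polys (all coefficient lists)."""
    n = max(len(p) for p in polys + [target])
    vs = [pad(p, n) for p in polys]
    return rank(vs + [pad(target, n)]) == rank(vs)

polys = [[1, 1], [0, 2, 5], [3, 0, 0, 1]]   # 1 + z, 2z + 5z^2, 3 + z^3
m = 3                                        # largest degree in the list
z_power = [0] * (m + 1) + [1]                # z^(m+1) = z^4
print(poly_in_span(polys, z_power))          # False
print(poly_in_span(polys, [4, 1, 0, 1]))     # True: (1 + z) + (3 + z^3)
```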

Linear Independence

Definition

A list $v_1, \dots, v_m$ of vectors in $V$ is called linearly independent if the only way to get $a_1v_1 + \cdots + a_mv_m = 0$ is by setting $a_1 = \cdots = a_m = 0$ (every coefficient is zero).

The empty list is declared to be linearly independent.

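This is similar to the condition for a sum of subspaces to be a direct sum: $U_1 + \cdots + U_m$ is direct exactly when the only way to write $0$ as $u_1 + \cdots + u_m$, with each $u_k \in U_k$, is by taking every $u_k = 0$.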

Important Corollary

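$v_1, \dots, v_m$ is linearly independent $\iff$ each vector in $\operatorname{span}(v_1, \dots, v_m)$ has only one representation as a linear combination of $v_1, \dots, v_m$. Proof:

- ($\Rightarrow$) $v_1, \dots, v_m$ is linearly independent $\implies$ each vector in $\operatorname{span}(v_1, \dots, v_m)$ has only one representation as a linear combination of $v_1, \dots, v_m$.

- Assume $v_1, \dots, v_m$ is linearly independent. Then $a_1v_1 + \cdots + a_mv_m = 0$ only if every $a_k$ is $0$.

- Now assume there are two ways to represent an element $v \in \operatorname{span}(v_1, \dots, v_m)$. Then we have $v = a_1v_1 + \cdots + a_mv_m$ and $v = c_1v_1 + \cdots + c_mv_m$.

- Subtracting the two gives us $v - v = 0$; thus: $0 = (a_1 - c_1)v_1 + \cdots + (a_m - c_m)v_m$.

- Remember that $v_1, \dots, v_m$ is linearly independent. Thus every coefficient is equal to 0: $a_k - c_k = 0$ for each $k$.

- By this, we have $a_k = c_k$ for each $k$, and the two representations are actually the same.

- ($\Leftarrow$) Each vector in $\operatorname{span}(v_1, \dots, v_m)$ has only one representation as a linear combination of $v_1, \dots, v_m$ $\implies$ $v_1, \dots, v_m$ is linearly independent.

- Assume each vector in $\operatorname{span}(v_1, \dots, v_m)$ has only one representation as a linear combination of $v_1, \dots, v_m$.

- Setting each coefficient to 0 gives us $0v_1 + \cdots + 0v_m = 0$. By assumption this must be the only way to represent $0$, so $v_1, \dots, v_m$ is linearly independent.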

Examples of linear independence:

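- Let's prove that $(1,0,0,0), (0,1,0,0), (0,0,1,0)$ is linearly independent in $\mathbf{F}^4$.

- To prove this, we have to show that the only way to get $(0,0,0,0)$ as a linear combination is by setting all the coefficients to zero.

- Notice the following: $a_1(1,0,0,0) + a_2(0,1,0,0) + a_3(0,0,1,0) = (a_1, a_2, a_3, 0)$.

- This means that if the linear combination equals $0$, then $(a_1, a_2, a_3, 0) = (0,0,0,0)$.

- The only way for this to happen is $a_1 = a_2 = a_3 = 0$. Thus this list is linearly independent.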

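- Prove that the list $1, z, \dots, z^m$ is linearly independent in $\mathcal{P}(\mathbf{F})$.

- Suppose $a_0 + a_1z + \cdots + a_mz^m$ is the zero function. The zero function has to send all inputs to zero. By the uniqueness of coefficients noted above, this is only possible if $a_0 = a_1 = \cdots = a_m = 0$.

- Thus the list is linearly independent.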

- A list of length 1 in a vector space is linearly independent $\iff$ the vector in the list is not $0$.

- Makes sense: any scalar multiplied by the $0$ vector equals $0$. So if the vector is $0$, we can use a nonzero coefficient such as $1$ and still get $0$.

- Conversely, a nonzero vector multiplied by a scalar equals $0$ only if the scalar is $0$.

- A list of length 2 in a vector space is linearly independent $\iff$ neither of the two vectors in the list is a scalar multiple of the other.

- This makes sense. If, say, $v_1 = \lambda v_2$, you can create a linear combination with $1$ and $-\lambda$ as the coefficients: $1v_1 + (-\lambda)v_2 = 0$.
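The examples above can be spot-checked numerically. This is a minimal Python sketch, assuming vectors in $\mathbf{F}^n$ are tuples of rationals. A list is linearly independent exactly when row reduction (RREF) produces a pivot for every vector in it. `rank` and `is_linearly_independent` are hypothetical helper names.

```python
from fractions import Fraction

def rank(vectors):
    """Number of pivots found by row-reducing the vectors (as rows), exactly."""
    rows = [[Fraction(x) for x in v] for v in vectors]
    r = 0
    ncols = len(rows[0]) if rows else 0
    for col in range(ncols):
        pivot = next((i for i in range(r, len(rows)) if rows[i][col] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        for i in range(len(rows)):
            if i != r and rows[i][col] != 0:
                factor = rows[i][col] / rows[r][col]
                rows[i] = [a - factor * b for a, b in zip(rows[i], rows[r])]
        r += 1
    return r

def is_linearly_independent(vectors):
    """v_1, ..., v_m is linearly independent iff row reduction finds m pivots.

    The empty list has 0 pivots and length 0, matching the convention
    that the empty list is linearly independent.
    """
    return rank(vectors) == len(vectors)

print(is_linearly_independent([(1, 0, 0, 0), (0, 1, 0, 0), (0, 0, 1, 0)]))  # True
print(is_linearly_independent([(0, 0, 0)]))        # False: length 1, the vector is 0
print(is_linearly_independent([(1, 2), (2, 4)]))   # False: (2, 4) = 2 * (1, 2)
print(is_linearly_independent([(1, 2), (2, 3)]))   # True: neither is a multiple of the other
print(is_linearly_independent([]))                 # True: the empty list
```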

If some vectors are removed from a linearly independent list, the remaining vectors are still linearly independent.

- This makes sense. Suppose some linear combination of the remaining vectors, with not all coefficients $0$, equaled $0$. Giving the removed vectors coefficient $0$ would then produce such a combination for the original list, contradicting its linear independence.

Linearly dependent definition:

- A list of vectors is called linearly dependent if it is not linearly independent.

- In other words, you can write 0 as a linear combination without having all the coefficients equal to 0.

Let's do an example:

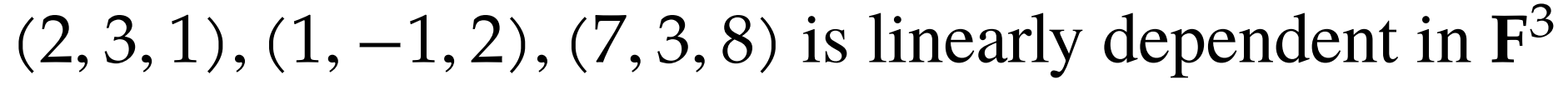

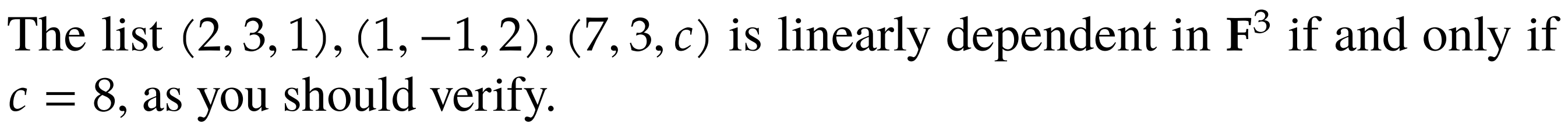

Prove the following:

#todo after you relearn RREF

#todo after you relearn RREF

#todo similar

#todo similar

Important things to notice

If some vector in the list is a linear combination of other vectors in the list, then the list is linearly dependent. Proof:

- This clearly holds. If $v_k = \sum_{j \neq k} a_jv_j$, move $v_k$ to the other side with coefficient $-1$: $a_1v_1 + \cdots + (-1)v_k + \cdots + a_mv_m = 0$. This writes $0$ with the nonzero coefficient $-1$.

Every list containing $0$ is linearly dependent. Proof:

- The coefficient in front of the $0$ vector can be anything; it does not have to be $0$. Choosing $1$ for it and $0$ for every other coefficient still writes $0$ as a linear combination.

Linear Dependence Lemma

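Suppose $v_1, \dots, v_m$ is a linearly dependent list in $V$. Then there exists $k \in \{1, 2, \dots, m\}$ such that $v_k \in \operatorname{span}(v_1, \dots, v_{k-1})$. Additionally, suppose $k$ satisfies the condition above and the $k$th term is removed from $v_1, \dots, v_m$. Then the span of the remaining list equals $\operatorname{span}(v_1, \dots, v_m)$, meaning the removal does not change the span.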

Proof:

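- Proof that $v_k \in \operatorname{span}(v_1, \dots, v_{k-1})$:

- Let $v_1, \dots, v_m$ be a linearly dependent list in $V$. That means there are $a_1, \dots, a_m \in \mathbf{F}$, not all $0$, that write $0$ as a linear combination.

- Thus: $a_1v_1 + \cdots + a_mv_m = 0$.

- Let $k$ be the largest index such that $a_k \neq 0$, i.e. you have: $a_1v_1 + \cdots + a_kv_k = 0$.

- Then you can write $v_k$ as a linear combination of $v_1, \dots, v_{k-1}$: $v_k = -\frac{a_1}{a_k}v_1 - \cdots - \frac{a_{k-1}}{a_k}v_{k-1}$.

- Thus, as it can be written as a linear combination of $v_1, \dots, v_{k-1}$, we know $v_k \in \operatorname{span}(v_1, \dots, v_{k-1})$.

- Proving the second part of the theorem:

- Notice that we have $v_k = b_1v_1 + \cdots + b_{k-1}v_{k-1}$ for some $b_1, \dots, b_{k-1} \in \mathbf{F}$.

- Removing $v_k$ does nothing to change the span, because $v_k$ can still be created by the remaining vectors on the list. Take any $u = c_1v_1 + \cdots + c_mv_m \in \operatorname{span}(v_1, \dots, v_m)$ and replace $v_k$ with $b_1v_1 + \cdots + b_{k-1}v_{k-1}$. This works because $u$ then becomes a linear combination of the remaining vectors. Conversely, anything in the span of the remaining list is already in $\operatorname{span}(v_1, \dots, v_m)$.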
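The #todo exercises below ask for the smallest $k$ that works. One way to sketch that search in Python, assuming vectors in $\mathbf{F}^n$ are tuples of rationals, is to test $v_k \in \operatorname{span}(v_1, \dots, v_{k-1})$ for $k = 1, 2, \dots$ in turn. Each test checks whether appending $v_k$ increases the number of pivots found by row reduction. The helper names and the example list are made up for illustration.

```python
from fractions import Fraction

def rank(vectors):
    """Number of pivots found by row-reducing the vectors (as rows), exactly."""
    rows = [[Fraction(x) for x in v] for v in vectors]
    r = 0
    ncols = len(rows[0]) if rows else 0
    for col in range(ncols):
        pivot = next((i for i in range(r, len(rows)) if rows[i][col] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        for i in range(len(rows)):
            if i != r and rows[i][col] != 0:
                factor = rows[i][col] / rows[r][col]
                rows[i] = [a - factor * b for a, b in zip(rows[i], rows[r])]
        r += 1
    return r

def smallest_dependent_index(vectors):
    """Smallest k (1-based) with v_k in span(v_1, ..., v_{k-1}), or None.

    v_k lies in that span exactly when appending it does not increase the rank.
    For k = 1 the span is span() = {0}, so k = 1 works only when v_1 = 0.
    """
    for k in range(1, len(vectors) + 1):
        if rank(vectors[:k]) == rank(vectors[:k - 1]):
            return k
    return None

vs = [(1, 2, 3), (6, 5, 4), (15, 16, 17), (8, 9, 7)]
k = smallest_dependent_index(vs)
print(k)  # 3, since (15, 16, 17) = 3*(1, 2, 3) + 2*(6, 5, 4)
remaining = vs[:k - 1] + vs[k:]
# The remaining list spans a subspace of the original span with the same rank,
# so removing v_k leaves the span unchanged.
print(rank(remaining) == rank(vs))  # True
```

A return value of `None` means no such $k$ exists, which happens exactly when the list is linearly independent.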

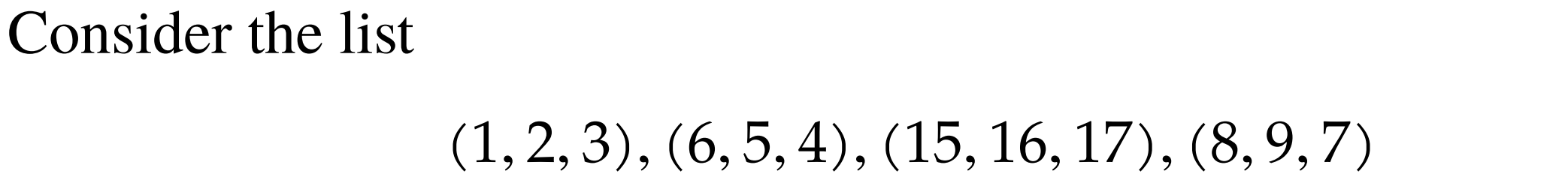

#todo for each, show whether the linear dependence lemma holds. Stop when you find the smallest k for which it holds.

#todo for each, show whether the linear dependence lemma holds. Stop when you find the smallest k for which it holds.

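Length of linearly independent list $\leq$ length of spanning list

In a finite-dimensional vector space $V$, the length of every linearly independent list of vectors is less than or equal to the length of every spanning list of vectors.

Proof:

- Let $w_1, \dots, w_n$ be a spanning list of vectors for $V$ and let $u_1, \dots, u_m$ be a linearly independent list in $V$.

- Let's move $u_1$ to the spanning list: $u_1, w_1, \dots, w_n$. This list is now linearly dependent, as $u_1 \in \operatorname{span}(w_1, \dots, w_n)$ due to $w_1, \dots, w_n$ being a spanning list. Also $u_1 \neq 0$, since it belongs to a linearly independent list and any list containing $0$ is dependent. So $u_1 \notin \operatorname{span}() = \{0\}$, and the linear dependence lemma gives some $w_k$ with $w_k \in \operatorname{span}(u_1, w_1, \dots, w_{k-1})$. Remove this $w_k$. The spanning list still has length $n$, and by the lemma the span does not change.

- Keep doing this with every $u_j$ in order, adding $u_j$ right after $u_1, \dots, u_{j-1}$. The list is always linearly dependent, because $u_j$ is in the span of the previous spanning list. In a linear dependence relation on it, some $w$ must have a nonzero coefficient, since $u_1, \dots, u_j$ is linearly independent. So the vector removed by the lemma can be taken to be a $w$, and there is always a $w$ to remove. After step $m$ the list consists of $u_1, \dots, u_m$ followed by the remaining $w$'s (or just $u_1, \dots, u_m$ if none remain), and it still spans $V$. Each step removed one $w$, so $m \leq n$: the spanning list is either as long as, or longer than, the independent list. $\blacksquare$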
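The exchange argument can be replayed step by step in a Python sketch. It assumes vectors in $\mathbf{F}^n$ are tuples of rationals, that `independent` really is linearly independent, and that it lies in the span of `spanning`. At each step it removes the $w$ at the smallest index $k$ allowed by the linear dependence lemma, which is one valid choice. All helper names and example lists are hypothetical.

```python
from fractions import Fraction

def rank(vectors):
    """Number of pivots found by row-reducing the vectors (as rows), exactly."""
    rows = [[Fraction(x) for x in v] for v in vectors]
    r = 0
    ncols = len(rows[0]) if rows else 0
    for col in range(ncols):
        pivot = next((i for i in range(r, len(rows)) if rows[i][col] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        for i in range(len(rows)):
            if i != r and rows[i][col] != 0:
                factor = rows[i][col] / rows[r][col]
                rows[i] = [a - factor * b for a, b in zip(rows[i], rows[r])]
        r += 1
    return r

def smallest_dependent_index(vectors):
    """Smallest k (1-based) with v_k in span(v_1, ..., v_{k-1}), or None."""
    for k in range(1, len(vectors) + 1):
        if rank(vectors[:k]) == rank(vectors[:k - 1]):
            return k
    return None

def exchange(spanning, independent):
    """Replay the proof: add each u_j after the earlier u's, then drop one w.

    Returns the final spanning list u_1, ..., u_m, <remaining w's>.
    """
    us, ws = [], list(spanning)
    for u in independent:
        us.append(u)
        # u_1, ..., u_j, <remaining w's> is linearly dependent, so some vector lies
        # in the span of the ones before it. It cannot be a u: the u's come first
        # and are linearly independent.
        k = smallest_dependent_index(us + ws)
        assert k is not None and k > len(us)
        del ws[k - 1 - len(us)]  # remove that w
        # The new list lies in span(spanning) and has the same rank, so same span.
        assert rank(us + ws) == rank(spanning)
    assert len(independent) <= len(spanning)  # the inequality being proved
    return us + ws

spanning = [(1, 0, 0), (0, 1, 0), (0, 0, 1), (1, 1, 1)]
independent = [(1, 1, 0), (0, 1, 1)]
print(exchange(spanning, independent))
# [(1, 1, 0), (0, 1, 1), (1, 0, 0), (1, 1, 1)]
```

Each pass through the loop removes exactly one $w$, which is why the loop can only run as many times as there are $w$'s.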

Every subspace of a finite dimensional vector space is finite dimensional

Proof:

- Assume $U$ is a subspace of some finite-dimensional vector space $V$.

- Now the next step is repeated: find some $u_j \in U$ such that $u_j \notin \operatorname{span}(u_1, \dots, u_{j-1})$ (the previously picked vectors), then add it to the list. Stop when there is no such $u_j$ left.

- No picked vector is in the span of the previously picked ones, so the list is linearly independent by the linear dependence lemma.

- Since this is a linearly independent list in $V$, it cannot be longer than any spanning list of $V$. So the process stops after finitely many steps.

- Once it stops, every $u \in U$ is inside $\operatorname{span}(u_1, \dots, u_j)$. So this is a finite spanning list of $U$, and $U$ is finite-dimensional.